Artificial Life: A Technoscience Leaving Modernity?

An Anthropology of Subjects and Objects

Lars Christian Risan

With a foreword by Inman Harvey

TMV-senteret 1997

For letting me be part of their works and lives for 8 months during 1994, for great intellectual inspiration, and for many good times, thanks to the employees and the students of the School of Cognitive and Computing Sciences at the University of Sussex. Special thanks to:

Inman Harvey, Michael Wheeler, Seth Bullock, Philip Jones, Paulo Costa, Horst Hendriks-Jansen, Ronald Lemmen, Fred Keijzer, Ron Chrisley, Phil Husbands, Peter de Bourcier, Adrian Thompson, Pranath Fernando, Eevi Beck, Christian Mullon, Matthew Elton, Jim Stone, Dave Cliff, Guillaume Barreau, Stephen Eglen, Geoffrey Miller, Arantza Etxeberria, Robert Davidge, Margaret Boden, and Chris Thornton.

Special thanks to Henrik Sinding-Larsen and Claus Emmeche for guiding my first steps into the Artificial Life community.

For supporting, inspiring, and critical comments to the many drafts that have led to this thesis, thanks to: Henrik Sinding-Larsen, Geir Kirkebøen, Stefan Helmreich, Maria Guzmán Gallegos, Hendrik Storstein Spilker, Helge Kragh, Leif Lahn, Eevi Beck, and Kari-Anne Ulfsnes.

For being a source of creative ideas, and for providing accurate and patient corrections to a lot of inaccurate and sometimes quite far-fetched writing, special thanks to my supervisor Finn Sivert Nielsen.

Many thanks to Mary Lee Nielsen for her thorough proof-reading of the thesis.

Financial support for my fieldwork was provided by The Norwegian Research Council NFR (102542/530). The Centre for Technology and Culture (TMV-senteret) has provided me with logistic, economic, and moral support. Many thanks.

Special thanks to my parents, Ragnhild Risan and Ernst Olav Risan, for giving me a Real Life with love.

One of the themes of this thesis is how researchers use some sort of "quotation marks" when uttering or writing particular words. Emic terms are therefore set in italics, for example: artificial evolution. When the normal practice of the Artificial Life researchers was to add quotation marks to a particular word (possibly mimicking them in the air when speaking), I have marked this by adding quotation marks and italics to the word, for example: the "brain" of a robot. When words are set in quotation marks without italics, I am the one who makes reservations on the literalness of the word, not they.

Transcripts of taped interviews are marked off from regular quotations of written works by indent and italics.

This report is a publication of my Cand. polit. thesis in anthropology at the University of Oslo. Some minor corrections have been made, mostly concerning the language. In line with the recommendations of the examining commission, some persons have become more visible in this revised text. E.g., some instances of "an ALifer said" have now become a more proper subject, even if this person may be anonymised. Hopefully, I have in this way avoided some inaccuracies that were perceived as doubtful generalisations. Many thanks to all those who have read the thesis and made valuable comments. These corrections aside, the overall content of this report is similar to the thesis. Thanks to the Norwegian Research Council NFR and to the Centre for Technology and Culture for supporting the publication of this report.

Lars Risan

Oslo, September 1997

I come from a small tribe of Artificial Life researchers, our particular village based at the University of Sussex. One day we had a visitor from a different culture, who asked if he could study us and learn our ways. We like to show respect (at least initially...) to strangers, so we agreed; the unspoken bargain was that if he did not respect our culture and our goals then we would put him in the cooking pot and eat him.

Well, Lars Risan stayed many months, and became a friend and a colleague, and we didn't have to eat him. Many of us have travelled in (literally) distant lands and met people whose culture, day-to-day concerns, and language, are apparently so different from ours as to make communication minimal; but so often some shared event or worry or problem shows that we do indeed have common interests that bridge the gap between our cultures. It became clear very soon that some of Lars' concerns as a cultural anthropologist echoed our own as A-Life researchers - in particular, concerns about the reflexivity and objectivity of our respective research programmes - and a dialogue started which is reflected in parts of this book.

A-Lifers have to get to grips with what it means for anything to be alive - anything from a bacterium to a tree, from a human to (potentially) a robot. One theme that some of us use is to relate Life to Cognition (in a broad sense): living creatures know their world, know what has significance for them, through their interactions with it, and without a world of meaning for X, X cannot be alive. As humans, as scientists, we ourselves inhabit a world of words and of theories, where through interchange of ideas we try to find a common language through which we can make sense of our fields of study: common sense, objective or inter-subjective agreement. The language of objectivity usually implies that we can stand apart from our field of study as impartial, godlike observers, but above all here we must recognise that we ourselves, our modes of understanding, are inextricably linked with our subject matter.

Lars, of course, has a comparable problem in applying the cultural norms of anthropological research to the study of different cultures; above all a culture where we have opinions about his task. As Lars says, we were from his perspective often colleagues as well as informants, and the same holds true from our perspective - his research has relevance to our own concerns. I have gained immensely from his thoughtful analysis of the problems of objectivity and reflexivity that we all face.

As an interested participant, I can recommend his study as being extremely fair and insightful regarding the attitudes and beliefs, the conflicts and debates within our community. Yes, it all rings true, Lars understood and respected our ways; I am glad we didn't have to eat him.

Inman Harvey

Sussex, September 1997

We are attending a seminar at COGS - School of Cognitive and Computing Sciences - a school at the University of Sussex, where I conducted the major part of the anthropological fieldwork on which this thesis is based. One Artificial Intelligence (AI) researcher defends a characterisation of human beings that he labels "Man-the-Scientist". He claims that human beings, generally, can be understood as a "scaling down of the scientist". Humans, he argues, are fundamentally rational beings. Even in the play of small children we find elements of logical inductions and deductions. If we want to understand intelligence we have to understand these rational, logical patterns. This can be done by making computer programs that exhibit this rational behaviour and that follow the same logical rules as human beings. It can also be done by studying the human brain, the place where these rational processes occur naturally.

The opponent AI-researcher argues that this view of intelligence is really a defence of a Judeo-Christian conception of the "supreme man", the unique man securely situated "above" all other living creatures, given his privileged position by the fact that he is "intelligent". Rather, the opponent argues, human intelligence, including our ability to use language and to think logically, is part of a much larger phenomenon - the ability of all animals, or perhaps even of all life, to behave in an "intelligent" fashion, to act as cognitive creatures. These cognitive abilities, the researcher claims, are dependent on the creature's body and its interaction with its environment. Cognition, he says, is embodied and embedded.

The second researcher works in a new scientific field that is known as Artificial Life, called, for short, ALife. The first researcher upholds a view known as Good Old Fashioned Artificial Intelligence, or GOFAI. He defends a philosophical or scientific position that Artificial Life research at COGS was a reaction to. In doing this he represented the academic "significant other" to the Artificial Life research at COGS.

The ideas and practices of Artificial Life research, and the interactions between these ideas and practices, are the topics of this thesis. How can the study of life, which ALife researchers see as pregiven by Darwinian evolution, be combined with the study of the artificial, which they see as "man made"? What implications does the combination of "artificial" and "life" have for how they practise their science? We will see that this combination makes Artificial Life a blend of a traditional naturalistic science and what they themselves sometimes call a postmodern science.

A brief introduction to the field of Artificial Life research

The term "Artificial Life" was coined in 1987 by an American scientist, Chris Langton, working at Santa Fe Institute, a scientific centre in New Mexico, USA. The field got off to a good start at a workshop that Langton arranged in Santa Fe, and was confirmed two years later by a second workshop, "Artificial Life II", and by the publication of the proceedings of the first workshop: Artificial Life. The proceedings of an interdisciplinary workshop on the synthesis and simulation of living systems (Langton 1989).

In the introduction to these proceedings, Langton defines Artificial Life as "...the study of man-made systems that exhibit behaviours characteristic of natural living systems." (Langton 1989b:1) These "man-made systems" are usually computers and robots.(1) I have called Artificial Life a "field" and not a "discipline" because of the interdisciplinary nature of the research. People come to Artificial Life from such disciplines as computer science, philosophy, psychology, biology and physics. Unlike biology proper, where researchers tend to get involved in minute details within biological sub-disciplines, ALife researchers are interested in life at its most general: its origin, its fundamental properties, its distinction from "not-life". Thus, Artificial Life has much in common with theoretical biology or with what is traditionally known as natural philosophy, the general inquiries into what life is and how it can be understood.

In 1991 Francisco Varela and Paul Bourgine arranged the first European Conference on Artificial Life (ECAL 91). In their introduction to the proceedings of this conference Varela and Bourgine define Artificial Life research as part of a line of research and thought which "...searches the core of basic cognitive and intelligent abilities in the very capacity for being alive." (Varela and Bourgine 1992:xi) According to this definition, Artificial Life research is, strictly speaking, not a study of the general properties of life, but a study of the general properties of "cognitive and intelligent abilities". Varela and Bourgine search for these properties "in the very capacity for being alive." Being "alive" is thus defined as having "cognitive and intelligent abilities"; life processes are also cognitive processes. This is the main message of one of the papers in the proceedings. The paper is called Life = Cognition (Stewart 1992).

In their introduction Varela and Bourgine emphasise that Artificial Life is part of a longer line of thought. As many other ALife researchers do, they trace Artificial Life research back to the advent of Cybernetics in the late 1940s (1992:xi). Norbert Wiener defined Cybernetics as "control and communication in the animal and the machine." (Wiener, 1948) Practitioners of cybernetics in the late 1940s, as ALife researchers now, were occupied both with making life-like or intelligent computers and robots, and with understanding life and cognitive or mental phenomena. The emphasis that the ALifer in the story above placed on seeing cognition as embodied and embedded, that is, as an aspect of a larger system than the human brain, is something that he shares with many cyberneticians. Gregory Bateson, an anthropologist deeply involved in cybernetics, writes that "the mental characteristic of the system is immanent, not in some part, but in the system as a whole" (Bateson 1972:316). During the 1950s the cybernetics movement fragmented. Some social scientists, with Bateson as a leading figure, started to apply the systemic perspective to social systems. This "social cybernetics" became particularly popular within family therapy (see for example Bateson et al. 1956). Within engineering, cybernetics became a technique for making control systems (such as thermostats or goal-seeking missiles). The discipline that combined the human/biological interest with the technical interest of the early cyberneticians became known as Artificial Intelligence (AI), today often referred to a bit ironically as GOFAI. The practitioners of this discipline distanced themselves from cybernetics. They rejected the holism of the systemic perspective and emphasised the formal and logical aspects of human cognition. The advent of Artificial Life research at places such as COGS is thus a reintroduction of the early cybernetic notions in contemporary artificial intelligence research.

ALife-researchers called themselves ALifers and, as a whole, the ALife community. The term "ALifer" was invented a bit as a joke at the first Santa Fe workshop in 1987. The term "ALife community" derives from the English designation of scientific communities (you also have the "anthropological community"). I first learned that Artificial Life existed from a book on the topic, written by the Danish biologist and philosopher of science, Claus Emmeche(2) (Emmeche 1991). I wrote to Emmeche to find out where the people studying Artificial Life actually were, and the ball started rolling. I soon learned that the ALife community consisted of people in many universities and research institutions in Europe, North America and Japan. These researchers communicated mainly through a channel I at that time knew nothing about, electronic mail. In addition they met a couple of times a year at international conferences on Artificial Life and related topics. Both in order to learn more about Artificial Life and to find a suitable location for anthropological fieldwork I set out on two journeys in 1993. In May I went to the European Conference on Artificial Life in Brussels, and then, equipped with some more names, addresses, and invitations, I visited the University of Zürich, the University of California at Los Angeles, the Santa Fe Institute, and the School of Cognitive and Computing Sciences (COGS) at the University of Sussex. I also attended a conference on Artificial Intelligence in France and a one-week summer school on Artificial Life in Spain. At COGS I found a small but active group of ALife researchers, several visiting researchers involved in ALife, and, perhaps most important, a number of Ph.D. students of Artificial Life. Here, a part of the ALife community was also observable in periods between the international conferences. I was generously invited back, and in January 1994 I started my 8-month-long fieldwork at COGS.

School of Cognitive and Computing Sciences (COGS)

The interdisciplinarity of Artificial Life research is reflected in the way the University of Sussex is organised. The university, as well as the campus, is divided into interdisciplinary schools. These schools are concerned with various topics. People can belong both to a discipline and to a school, but the actual buildings are schools, not faculties. Thus people from one discipline can belong to different schools. For example, there are psychologists in at least three different schools, one of them COGS. COGS has a particularly interdisciplinary orientation: it houses people both from the sciences and the arts.(3) This combination is reflected in the name of the school: people either study something that has to do with computing, that is, they are computer scientists with a mathematical or natural-scientific background, or they study something that has to do with cognition - "thinking" or "mind". That is, they are interested in what is known as philosophy of mind and are philosophers or psychologists. Most people do both. They study both computing and cognition, and particularly the relation between these two. This endeavour is known as Artificial Intelligence or Cognitive Science. It is characterised by a combination of mechanistic/formalistic methods and perspectives, and by an interest in the philosophy of mind. This double commitment of Artificial Intelligence research is symbolised in the acronym "COGS", which has associations with both "cognition" and the mechanics of cogwheels (a cogwheel has "cogs" around its rim).

During my fieldwork there were about 60 people employed at COGS, including researchers and office personnel, about the same number of D.Phil. students and a couple of hundred undergraduates. The core of the ALife group was the evolutionary robotics group, made up of three researchers: Dave Cliff, Inman Harvey and Phil Husbands. About four or five other researchers and between 10 and 20 Ph.D. students were more or less involved in Artificial Life research.

The members of this group of ALifers had different academic backgrounds, including physics, electrical engineering, philosophy, psychology, biology, and computer science. Depending on the context of relevance the same researcher could be studying artificial intelligence, cognitive science, computer science or ALife, and they could call themselves computer scientists, cognitive scientists, ALifers, or philosophers. To complicate the picture further, ALifers at COGS sometimes call their science Simulation of Adaptive Behaviour (for short: SAB). Practitioners of this study are part of the SAB-community. This "community" meets every second year, at a conference series named SAB90, SAB92, SAB94, and so forth. Both with respect to the content of these conferences and to the people attending them, these conferences have a lot in common with the ALife conferences. We will return to what "simulation of adaptive behaviour" means later. I mention this scientific sub-field here to illustrate the large variety of disciplines and research fields that make up the ALife group at COGS.

People at COGS have a relaxed attitude towards disciplinary boundaries. This allows for an open playfulness with ideas and positions: researchers can hold a position and defend it without always having to defend their own personal and/or disciplinary identity. I think this playfulness and interdisciplinarity is one of the most important reasons why ALife research has become such a big thing at COGS. (The playfulness, of course, takes place within certain premises or taken-for-granted frames that define research at COGS. I will later return to both some of the premises and some of the playfulness of the scientific practice at COGS.)

In this thesis I will refer to the people that do ALife research at COGS as "the ALifers at COGS". This is partly justified because this group meet regularly to discuss ALife affairs, and sometimes, even if not very often, they call themselves ALifers. They do however also call themselves and their endeavour a lot more than "ALifers" and "ALife" - notably, perhaps, "cognitive scientists" and "cognitive science". To avoid too much confusion I will stick to the terms "ALifer" (or "ALife-researcher") and "ALife", unless other distinctions are of relevance. This means that the term "ALifer" will occur much more frequently in this thesis than it ever did at COGS, and that "the ALifer" appears to be more important in this thesis than he actually was at COGS. I hope this warning will help avoid that possible misconception.

We may talk broadly about two ALife traditions. The first tradition attempts to explain life at its most general, whereas the second attempts to explain cognition at its most general. If, as most ALifers hold, "life equals cognition", then these two endeavours should logically be identical, but there are historical differences. The first tradition, of which the Santa Fe Institute is an exponent, is to a larger degree shaped by people from biology and physics (physicists have been involved in explaining life for the last 50 years), whereas the second tradition is shaped mostly by students of cognitive science, psychology and artificial intelligence. The first tradition has had a biological phenomenon or a biological system - an ecosystem, a social system or a biological cell, etc. - as its starting point. Chris Langton at Santa Fe Institute, for example, has constructed a computer program that generates a dynamical system (Langton, 1989b). The only thing this system does is to make copies of itself. It is this general aspect of life - its dynamical reproduction of itself - that Langton simulates. The second tradition has had as its starting point an intelligent or thinking individual - a human being or an animal. What do we mean by calling such an individual "intelligent", and what does it mean to be "intelligent"? Artificial Life at COGS belongs to the latter tradition.

I will not further discuss these differences within ALife research in general, but primarily focus on how ALife was practised and understood at COGS, even if the international conferences are also important arenas for the Artificial Life research here presented.

A central theme of this work is how ALife researchers construct their facts. By the term "construction" I mean more than a philosophical construction in the sense of a Wittgensteinian "language game", or in Berger and Luckmann's sense of people negotiating an agreement (Berger and Luckmann 1966). Philosophy is probably one of the practices which comes closest to being a pure "language game" or "social construction". (Philosophers sometimes jokingly say that they should not let their principal thoughts be affected by reality.) Artificial Life research is also philosophy - in the sense that ALifers share some interests with philosophers, and that it includes philosophers among its practitioners. But Artificial Life researchers add to this philosophy the making of machines. They program computers and build robots. In a very concrete sense they attempt to construct or engineer their machines so that these machines will exhibit what ALifers understand to be life-like or cognitive phenomena. Hence, the "constructions" I study are more than social constructions; they are technical constructions. The interactions that I focus on include more than interactions between humans (social interactions). They include interactions between humans and machines. We will see how the Artificial Life researchers and their machines relate to each other both in the laboratory, when the machines are made (chapter 5), and later, at the conference, when the machines are presented to a larger audience (chapter 6). But we will also see how ALifers talk about, understand, and negotiate meanings about their machines (chapter 4), and we will see various ways in which they reflect upon and discuss their own scientific practices (chapter 3).

There is one general theme that runs through all these different topics. This theme is, speaking generally, the boundary between subjects and objects, between humans (or life in general) and machines, between the subjective and the objective. ALifers both blurred and reproduced these boundaries in their scientific practices, and they contested and discussed them. In their practice they created methodological closeness or distance between themselves (subjects) and the machines they studied (objects). In their ontology they talked about their machines as human beings or as living systems, and they talked about human beings and living systems as machines. We might phrase these themes as questions: As practitioners of a science that studies machines in order to say something about life or cognition, and that even attempts to endow machines with life or intelligence, how do ALifers understand and contest the border between life and machines? Moreover, as a science that largely takes place within institutions of objective, rigorous, experimental science, where the relation between the questioning subject and the questioned object is characterised by objective distance, how and to what degree do ALife researchers reproduce these scientific practices when the nature they study so obviously is constructed? This thesis revolves around these questions.

The main sources that have inspired me in writing this thesis, and that I have used in order to make sense of how ALifers, in their research process, relate to their machines, and how they, in their theories and understandings, relate life (or cognition) to machines, are first, the writings of Francisco Varela and Gregory Bateson, and more recently, those of the French sociologists Bruno Latour and Michel Callon. What all these writers have in common is that they are (more or less explicit) expressions of 20th-century continental phenomenology. This tradition is heavily inspired by the writings of Martin Heidegger. A brief introduction to his writings thus follows here.

Continental philosophy since Heidegger is a huge body of philosophy of which I can just scratch the surface. There is one theme in this thinking that is relevant here, namely the way in which a perceiving and acting subject, a self, is related to a world of objects or bodies (including other human beings). Edmund Husserl, Heidegger's teacher, was, with respect to his understanding of the human self, following a philosophical tradition that dates back to Descartes (Lübcke 1982:67). Husserl defended the notion of the transcendental ego. If we understand ourselves as living "in" a stream of consciousness, and if we also understand this to be a stream of ever changing intentions - we direct our intention towards this task in one moment, towards that task the next moment - then we can define the transcendental ego as follows:

The concrete "I" is the continuity in the stream of consciousness [...]. It is this fully developed, concrete I understood as a unity in the stream of intentionality, that Husserl speaks of as the transcendental ego. (Lübcke 1982:65, my translation and italics)

Heidegger rejects this unity of the self (Lübcke 1982:124). Behind the stream of events and actions, of objects perceived and reacted to, there is no unified self, no transcendental ego, or Cartesian soul. The self is its lifeworld; it is, in Dreyfus' translation of Heidegger, a "Being-in-the-World" (Dreyfus 1991). The Being-in-the-World is both one and many (but never two clearly separated parts). It is one because the world and the self cannot be separated, and it is many because it changes all the time; it is in continuous flux, changing with changing contexts.

A certain relativism follows from the rejection of the transcendental ego; the world is dependent on, as it is a part of, the subject. But there also follows a certain realism because the subject is directly dependent on the world. This latter dependence can be illustrated by taking a look at one of the major philosophical problems of philosophers such as Husserl and Descartes. The problem is known as phenomenological solipsism (Lübcke 1982:66), the absolute loneliness. If there exists an I at a distance from (or as a "transcendental premise to" (1982:66)) the stream of experienced phenomena, then how can this "I" know that the stream of phenomena, including the perception of other people, is not just an illusion? How can "I" know that "I" am not totally alone in a stream of illusions referring to nothing at all "out there"? I am not going to describe how Husserl and Descartes solve this problem. The point is that both of these philosophers deal with the problem extensively (Lübcke 1982:66). In short, it is a problem to them. It is not a problem to Heidegger; it disappears as a problem because he rejects the existence of an I that can occupy the position of solipsist loneliness. The French phenomenologist Merleau-Ponty, following Heidegger, stresses the interdependencies between the self, or the subject, and the world:

The world is inseparable from the subject, but from a subject which is nothing but a project of the world, and the subject is inseparable from the world, but from a world which the subject itself projects. (Merleau-Ponty 1962:430)

Here, solipsist relativism is as absent as objectivist realism.

I am going to make a large jump in the history of ideas, to the contemporary sociology of the French sociologists Bruno Latour and Michel Callon (Latour 1987, 1988, 1993, Callon 1986, Callon and Latour 1992). We will return to their work many times in this thesis. Here I will just give a brief outline of some of their ideas. One of their main projects is, following a Heideggerian philosophy, to question the distinction between the "subjective" and the "objective", the "inner" and the "outer", the separation of humans from things. In what they have called a "network theory" they have developed a vocabulary that does not take the distinction between subjects and objects, the subjective and the objective, for granted. An "actant", for example, is more than a human actor. It may be an automatic door opener (Latour 1988), or it may be scallops in the sea (Callon 1986). In networks of humans, machines, animals, and matter in general, humans are not the only beings with agency, not the only ones to act; matter matters.

In Latour's terminology, the epistemologist and the political scientist are the academic "significant others" of the anthropologist of science (Latour 1993:143). The epistemologist is the philosopher who, thinking principally and normatively, tries to find ways in which scientists can represent Nature faithfully. The political scientist is occupied with finding ways in which politicians can represent Society faithfully. Latour's ideal "anthropologist of science", on the other hand, follows a well-trodden path in anthropology. He or she writes a holistic monograph in which one does not take the distinction between "politics", "cosmology", "religion", etc. for granted, but rather describes empirically how the subjects of study themselves make distinctions of these or other kinds. The anthropologist of science, then, should not take the distinction between politics and epistemology, Society and Nature, for granted, but rather explore how these distinctions come to be established. Latour's prescription for an anthropology of science is one that I to a large extent follow in this thesis, but with some reservations.

The particular perspective on science offered here is shaped by a central component of anthropology: fieldwork with participant observation. Rather than, for example, a historian's overview, I will present what Cole has called "a pig's eye view of the world". This, according to Cole, is "the view that researchers get when they leave the office or archive and spend time in the village mud." (Cole 1985:159) My "village" is the ALife-laboratories at COGS and the international conferences on Artificial Life. It does, however, also include written materials, as part of the everyday life of my "tribe" is the production of scientific papers. Other important sources of information are the public E-mail lists at COGS. In these lists, discussions were held in an informal, "oral" style, but with the important exception that things "said" could be perfectly captured for the future, and, for example, copied directly into an anthropological dissertation. My focus on local practices, I should note, does not mean that this thesis is unconcerned with general philosophical interests and perspectives, only that the very general is combined with descriptions of minute details.(4)

In Latour's anthropology of science studying "politics" is as relevant as studying "epistemology". To implement this holism, Latour prescribes a "network analysis". We should follow scientists wherever they may take us - from the workbench to the committee rooms of multinational companies and national governments (Latour 1987). Such a network analysis, if it is to be combined with participant observation, can be quite demanding - Latour's own laboratory study went on for a period of two years (see Latour and Woolgar 1979). Not denying the possible usefulness of such network analysis, the present work is nevertheless more limited in scope. My fieldwork at COGS and at conferences of ALife gave me a micro perspective of the practices and theories of ALifers. Some of the limitations of my perspective can be seen, for example, in the ways in which I did not acquire insight into how ALife was financed. When the UK Science and Engineering Research Council (SERC) visited the ALife group at COGS, I was not invited to participate in the meeting. The ALifers, I felt more than was explicitly told, did not want to disturb this important meeting by making it into the public event that the presence of an observing anthropologist would have created. Moreover, if I was to understand the relationship between COGS and SERC, I would also need to know what the people from SERC thought about COGS. This would have taken me out of COGS and into the offices of SERC, a place that I did not have the time and resources to visit.

This thesis, then, is not based on a network analysis in the full sense of the term. I am, however, inspired by Latour's insistence on taking neither Nature nor Society, neither the objective nor the subjective, for granted, and on studying instead how these distinctions of science are constructed. I will show that these distinctions are central in the scientific enterprise, and I will, from the "pig's eye view of the world", look at how and to what degree these distinctions are reproduced and challenged - both in theory and practice - in the work of a group of scientists who have made it a point to blur the boundaries between the artificial and the natural, the "man made" and the pregiven.

My allegiance to this phenomenology-inspired sociology has certain methodological implications - as some of the ALifers that I write about were also inspired by Heideggerian, post-Cartesian phenomenology.

The reflexivity of studying science scientifically

At the beginning of my fieldwork, at the weekly ALife seminar, I gave a talk where I told the ALifers at COGS what I intended to do. One of my "informants" then told me that there were Heideggerian elements in my ideas. He copied a chapter of the book Being-in-the-World (Dreyfus 1991) for me to read.

The quotation from Merleau-Ponty above is taken from a book by Varela, Thompson, and Rosch that explores the implications of phenomenological (and Buddhist) thought for cognitive science (Varela et al. 1993). Bateson's and Varela's more cybernetic (yet phenomenological) way to question the boundary of self has inspired me and is part of the background for this thesis. But, as we have seen, Bateson was not only an anthropologist. He was one of the first cyberneticians, and "my" Varela is the same Varela who arranged the first European Conference on Artificial Life.

I will later describe the implications that this phenomenological influence has on ALife research. Here I mention this influence in order to address some of its methodological implications. The people that anthropologists write about are always "experts" in the culture or social life that the anthropologist wants to understand. The anthropologist is almost always a rather fumbling "novice", an outsider. Some of my "informants" are experts in the philosophy that shapes the sociological perspective that I apply. That is, I have been informed by my "informants" about the theories on which this thesis is based as well as about the data I present. My "informants" are also my "colleagues". Latour and Woolgar discuss two major ways in which an anthropological (or sociological) work can be legitimated. They write:

One of the many possible schemes designed to meet criteria of validity holds that descriptions of social phenomena should be deductively derived from theoretical systems and subsequently tested against observations. In particular, it is important that testing be carried out in isolation from the circumstances in which the observations were gathered. On the other hand, it is argued that adequate descriptions can only result from an observer's prolonged acquaintance with behavioural phenomena. Descriptions are adequate, according to this perspective, in the sense that they emerge during the course of techniques such as participant observation. (Latour and Woolgar, 1979:37)

Latour and Woolgar (following Marvin Harris) call the first of these criteria etic validation (and the method applied is known as hypothetico-deductive method). The empirical testing may confirm or falsify a theory, but it is the community of fellow anthropologists who, ultimately, evaluate if a theory is valid or not. Latour and Woolgar call the second of the criteria of validity emic validation (and the method applied is hermeneutics). When it comes to this validation, "the ultimate decision about the adequacy", Latour and Woolgar write, "rests with the participants themselves." (1979:38) Lévi-Strauss and Geertz may be picked out to represent the two approaches (even if no anthropological work is based exclusively on emic or etic validation), Lévi-Strauss for his emphasis on building a grand, structuralist theory of human mind and culture (testing his theory against a large corpus of comparative data), and Geertz for his interest in approaching local meanings (through the hermeneutic interpretation of public symbols). However, as Latour and Woolgar point out, also the anthropologist who seeks emic validation "remains accountable to a community of fellow observers in the sense that they provide a check that he has correctly followed procedures for emic validation." (1979:38) That is, it is the community of anthropologists who ultimately decide if, for example, Geertz has given a valid description of how the Balinese experience their cock fights.

True emic validation, however, becomes increasingly important as our informants become a literate audience who read what is written about them. This thesis is written in English in order to make it available to ALife researchers, and I am invited back to the ALife seminar at COGS to present my work. I am, like most anthropologists, concerned with achieving both emic and etic validity. But my emic validation will not only be of a kind where my informants check whether I use their technical vocabulary correctly; they will also have informed opinions on the validity of the theoretical perspective I apply. This means that we will see examples of common interests between some of my "informants" and me in this thesis. My anthropological position will necessarily be in agreement with some ALifers and in disagreement with others. Taking sides cannot be avoided in some of the controversies I write about. I will attempt to write about these controversies from a "neutral", culture-relativist position. My position, however, will be present throughout the thesis, and particularly in the introductory and final remarks. This position will, to the degree that Artificial Life researchers read this thesis, be a part of the discussions that I write about.

Before ending this introduction I would like to discuss the role that I let the ALifers (my "data", but also my "colleagues") play in this text. This, as we will see later, is of particular relevance because one of the themes of this thesis is the role that the ALifers let their computer simulations play in their science.

There are generally two major ways in which people are visible in anthropological texts, as "(Barth 1968)" or as "one informant said...". People are colleagues or informants. Let us take a look at the difference between these two.

Thirty years ago Radcliffe-Brown referred to one of his colleagues as "Professor Durkheim" (Radcliffe-Brown 1968:123). Today we seldom see the formal title in academic writings. However, we still use our colleagues' surnames, not their first names. We refer to "Durkheim", not "Emile". Following this convention we treat our colleagues as respectable members of our scientific society. This is a public society, not a private sphere (as "Emile" would have suggested), and it is a society of individual subjects. By crediting (and possibly criticising) our colleagues for what they have written, we grant them both their individuality, with their rights and duties, and their membership in our society. We might say that by using the traditional reference, "(Radcliffe-Brown 1968:123)", we make our colleagues into subjects of our society. Now, let us take a look at the other large group of people that anthropologists refer to, our "informants".

Informants often appear in our texts anonymised, as "Marc", "one ALifer", or perhaps as "17% of the population". To anonymise may be necessary for many reasons. It may in extreme cases be a matter of life or death to those involved, for example when writing about people living under oppressive regimes. Not denying its frequent necessity, I am here concerned with one effect of anonymisation which may be problematic: it removes those we write about from the social and moral sphere in which we place ourselves and our colleagues. It does not give them rights and duties as subjects of our (academic) society. So whereas the academic reference subjectifies the human being, the anonymisation does the opposite; it creates distance, it objectifies. This distance is quite visible in two of my above-mentioned examples: "one ALifer" and "17% of the population". The third example, "Marc", is more tricky. Rather than creating distance, it creates intimacy. If "Marc" is written about at length we may get familiar with "Marc", and calling this person by a first name enhances this familiarity and intimacy. But still, it does not include him in the same society of subjects to which "Professor Durkheim" or "(Radcliffe-Brown 1968:123)" belongs.

The ALifers I am writing about will appear in this text in both of the ways mentioned above. I will refer to their publications, for example "(Langton 1989b)", and I will refer to things they have said and done; "...one researcher talked about..." I have, many times, considered not anonymising my accounts of informal events and utterances. The first time I had to consider this was after the first interview I conducted. I told the researcher that I would, if she wanted, anonymise her. She was offended by the very suggestion. She was not afraid of standing up for what she said. Did I think that she had something to hide? She did not want to become some kind of amoral Mrs. X. Her point impressed me. Should I include my "informants" in the scientific society (of responsible individuals) to which the anthropologists belonged? This question was particularly pertinent since I needed and wanted to refer to their publications, and then I would definitely use their real names.

I have decided not to use their real names. There is a lot of me in this thesis, even in the most empirical parts. When telling stories from my fieldwork I have tried to be faithful to what "really" happened, but all storytelling includes a lot of "construction". I have created the contexts to the empirical accounts, and I have selected, during and after the fieldwork, from what is really an enormous amount of events, a tiny handful of stories to illuminate my points.

One of the ways in which scientists make a living and a career is by writing - not merely saying - things that are later referred to and quoted. If I use an ALifer's real name and a third person quotes the ALifer - whose informal talk has been made into something written by me - this quotation may say something that the ALifer him- or herself would never have wanted to publish. Using pseudonyms will remove the possibility of formally referring to the ALifers, even if those who know the people I write about will recognise them. I have used first names as pseudonyms to mark the informal contexts from which the stories I tell are taken. It would have been quite absurd to do otherwise; people at COGS, independent of academic titles, always addressed each other by first names.

However, I have also wanted to show normal academic respect for scientists' work by referring to their publications when I use them. Hence, some of the ALifers at COGS will appear in this thesis as two characters; with their real names when I discuss and refer to things that they have written and published, and with a pseudonym when I refer to events and utterances from more informal settings.(5)

Chapter 1: Working Machines, Objectivity and Experiments

Artificial Life research attracts people both from the arts and the humanities, but its basic foundation is in the sciences. It is, to a large degree, practised as a rigorous, experimental science: hypotheses should be tested by setting up experiments, these experiments should be reproducible, and the results should be statistically significant or prove their validity by enabling the production of new, working technology. This last criterion is of central importance in ALife research. Working machines(6) play a pivotal role in the production of legitimate scientific results. People at COGS - ALifers as well as other researchers and students - ask for results, and by this they mean a working computer or robot which does something that can be classified as an intelligent or life-like behaviour. One of the early accomplishments of the first ALife robot at COGS was to find the centre of the small room in which it moved. Researchers and students who were critical of the ALife projects frequently asked questions of this kind: "Now, it did room-centring, but will it be able to do more advanced things, will it scale up?" The future of ALife research at COGS is linked to the question of whether the robots and computer programs of ALife will perform "more advanced tasks" - of whether they will "scale up" from, for example, room-centring to something which is more clearly an intelligent or life-like behaviour. The ALifers did not contest this frame of reference. To "scale it up" was one of the explicit aims of their research.

The aim of this chapter is first to situate Artificial Life within the larger context of science in general, and then to give a description of this general context. This description will be given from the particular point of view known as social studies of science. Hence, it will be as much a description of this point of view as of science itself.

To indicate Artificial Life research's dependency on working machines I will refer to this science as what Bruno Latour calls a technoscience (Latour 1987:174). This word denotes the same scientific disciplines as "experimental science" does, thus encompassing the natural sciences and some of the social sciences (such as economics and parts of psychology). But "technoscience", giving associations to "technology" and the Greek techne (skills), is also meant to refer to the practical and social contexts, the work, the skills, and the machines, of these sciences and scientists. "I will use the word technoscience", Bruno Latour writes, "to describe all the elements tied to the scientific contents no matter how dirty, unexpected or foreign they seem, ..." (1987:174). I also use "technoscience" rather than just "science" to establish distance between anthropology, "my" science, and Artificial Life, the science that I study. Social anthropology may be a science (though this is sometimes questioned), but it is not a technoscience.

To follow Latour and describe "... all the elements tied to the scientific contents..." might mean studying huge networks involving everything from laboratories to, say, weather satellites, global ecology, and international politics. In describing such networks there are many possible elements of relevance, and there are many perspectives from which they may be described. One of the central elements that I have focused on in studying ALife research is the role of the working machines and the results they both produce and are instances of themselves. But before discussing this in more detail, I will take a general look at some of the ways in which technoscience can be studied from a social science point of view. I will also consider the motivation and background for my own perspective.

During the last 25 years or so a new academic field has developed. It is known as the field of Science, Technology and Society (STS) or as Social Studies of Science (SSS). This academic field is made up of scholars from disciplines such as philosophy, anthropology, sociology, and history. If we should try to find one common theme in these studies, then the rejection of objectivism is probably a good candidate. The aim of these studies is, in different ways, to explain and describe the construction of scientific facts by embedding these processes of construction in social, cultural, and/or technological contexts. One of the pioneers in this field was the philosopher Thomas Kuhn. In The Structure of Scientific Revolutions (1962) he discussed the influence of social factors - such as changes in generations - on scientific changes. Kuhn, discussing the natural sciences and mainly physics, claimed that these disciplines did not develop gradually, but that they changed in leaps, as one paradigm replaced another.

In the 1970s sociologists, anthropologists, and others began to study the natural sciences empirically. These studies can broadly be divided into two main categories. The first has been called laboratory studies (Knorr-Cetina 1995). I call the second category discipline studies. Based on qualitative fieldwork, the laboratory studies have focused on how scientific facts are constructed. These studies have focused on the diverse practices - social, technical and conceptual - of life in laboratories, in international networks of laboratories, and in networks that exceed what is strictly "science" and include political, commercial and other interests (Latour 1987).

Harry Collins (1975) did pioneering work showing that the replication of facts in physics involved a replication of certain skilful practices. In 1979 the first technoscientific monograph, Latour & Woolgar's Laboratory Life, The Construction of Scientific Facts (1979), was published. Karin Knorr-Cetina's The Manufacture of Knowledge, An Essay on the Constructivist and Contextual Nature of Science (1981) followed two years later. Both of these works are based on participant observation in laboratories (a neuroendocrinology(7) lab and a plant-protein lab respectively). The books, however, are not mainly about the particularities of these biological labs and traditions. They address the practice of technoscience in general.

The general focus of both of these books is exemplified in Latour and Woolgar's account of their first meeting with their field. This account is not written as the story of one concrete ethnographer (Latour) visiting one particular place. It is the story of "An Anthropologist [who] Visits the Laboratory" (title of Chapter 2). The style is general, and the actual lab Latour visited only figures as an example of how such a meeting might take place. For example, they write: "Our anthropological observer is thus confronted with a strange tribe who spend the greatest part of their day coding, marking, altering, correcting, reading, and writing." (1979:49) Note however that even if the focus of this book is general, the method is a study of the particular. In order to explore the general notion that a fact is socially and technically constructed, Latour and Woolgar undertake an extremely detailed analysis of the events that took place in the construction of a single piece of fact, the existence of a particular hormone, that came to be known as the TRF(H) molecule. We learn nothing about the position of this hormone inside the human body, or its role in a particular, situated understanding of the human body from reading Latour and Woolgar. What we learn is the position of the hormone relative to the technical equipment in the lab and to a network of scientific articles that refer to each other. The TRF(H) molecule is in Latour and Woolgar's account not part of a human body or an understanding of the human body, it is part of a technical and social network of laboratory equipment and researchers.

In contrast to these sociological accounts of scientific practices, we find the "discipline studies". Rather than studying "the laboratory" and the skilled, social practice of its inhabitants, these studies probe the actual content of specific scientific traditions. They have a lot in common with studies in the history of consciousness or history of ideas. Such historical studies have shown, for example, how Wittgenstein's thoughts were influenced by the late 19th century Habsburg Vienna (Janik and Toulmin 1973): "Regarded as documents in logic and the philosophy of language," Janik and Toulmin write, "the Tractatus and the Philosophical Investigations stand - and will continue to stand - on their own feet. Regarded as solutions to intellectual problems, by contrast, the arguments of Ludwig Wittgenstein, like those of any other philosopher, are, and will remain, fully intelligible only when related to those elements in their historical and cultural background which formed integral parts of their original Problemstellung." (1973:32)

The discipline studies perform the same kind of contextualisation with present day scientists and sciences that Janik and Toulmin did of Wittgenstein. They give cultural, and culture critical interpretations of disciplines.

Donna Haraway is one of the influential writers in the cultural critical tradition of science studies. In her earlier work she focused, in her words, "on the biopolitical narratives of the sciences of monkeys and apes." (Haraway 1991:2) Her own political position is explicitly present in her works: "Once upon a time, in the 1970s, the author was a proper, US socialist-feminist, white, female, hominid biologist, who became a historian of science to write about modern Western accounts of monkeys, apes, and women." (1991:1) In her study of how biologists understand the large apes, she takes the role of the cultural context further than Janik and Toulmin, who looked only for the motivations behind Wittgenstein's work. Haraway looks at how the very content of science is shaped by social factors:

People like to look at animals, even to learn from them about human beings and human society. People in the twentieth century have been no exception. We find the themes of modern America reflected in detail in the bodies and lives of animals. We polish an animal mirror to look for ourselves. (1991:21)

In her Cyborg Manifesto (1991 [1989]) Haraway invents the feministic cybernetic organism, the Cyborg. In contrast to what she sees as feminist-socialist technophobia, this is an appropriation - a rewriting or contesting rather than a total rejection - of what she sees as the dominant image of the high-tech-man, the integrated man and machine, archetypically seen as the independent astronaut moving freely in space. Haraway, as I understand her, wants to use the image of the cyborg to draw our attention to "leaky distinctions" (1991:152) between humans and animals, between organisms and machines and between the physical and the non-physical. In line with Marxist thought she is fighting the naturalisation of social inequalities, and she sees in the Cyborg the possibility to rework the distinction between Nature and Culture, and hence the distinction between civilised and primitives, man and woman, etc., "the one can no longer be the resource for appropriation or incorporation of the other." (1991:151) They will possibly be parts of messy Cyborg-networks rather than parts subsumed under the hierarchical wholes of highly militarised, male dominant economies. In drawing these alternative images (of which I have just given a sketch) Haraway is openly utopian: "How might an appreciation of the constructed, artifactual, historically contingent nature of simians, cyborgs, and women lead from an impossible but all too present reality to a possible but all too absent elsewhere? As monsters [that is, as liminal objects that demonstrate], can we demonstrate another order of signification? Cyborgs for earthly survival!" (Haraway 1991:4)

In the high energy physics monograph by anthropologist Sharon Traweek - Beamtimes and Lifetimes, The World of High Energy Physicists (1988) - we find a combination of laboratory and discipline studies. Traweek gives us a description, "As thick as it could be," she writes (1988:162), of an American group of high energy physicists (doing so partly by comparing this group to a Japanese group). In her book we become acquainted with a group of American men who are organised into a particular social structure, a structure with its own academic cycles of reproduction and its own hierarchy. These men have specific identities, determined partly by their ancestral history (their professional forefathers, reproduced in textbooks by pictures showing a man, from the waist up, dressed in a suit, sometimes with a tie, other times more casually), partly by their huge and mythologically important machines (like SLAC, the Stanford Linear Accelerator), and partly by their national differences (as they understand these differences themselves).

In the three works mentioned above (Janik and Toulmin's, Haraway's, and Traweek's) the larger culture of which the scientists are a part plays an important role. But the role of this cultural context is different in each of the three works. To Janik and Toulmin, Wittgenstein's Vienna was a context that motivated Wittgenstein's writing, but it did not explain the content of his works. They did not want to reduce the logic of the Tractatus to the social life of 19th century Habsburg Vienna. Haraway, in her writings on ape research, describes a more profound cultural influence on science. The very content of the ape researchers' work is shaped by "modern America" (Haraway 1991:21). Traweek follows Janik and Toulmin rather than Haraway. The culture that she describes, a culture which is specific both to the physicists and to American culture as a whole, shapes the "laboratory life" of the physicists, but not demonstrably the content of their physics. Traweek does not try to explain electrons, photons and quarks as determined by the culture of physicists. She leaves physics to the physicists.

These three positions may to some degree reflect different conceptions of what "culture" determines. To look for such theoretical differences is beyond the scope of this presentation. However, it is quite clear that these differences are dependent on the scientific disciplines studied: It is much easier for biologists to read human society into a group of interacting gorillas than it is for physicists to do the same with interactions between electrons. Having had this look at the differences between these three works, I would like to restate their similarity; all three look at how the culture and society of scientists shape their science.

The present work on Artificial Life research is, on the one hand, a laboratory study. This is a result of the method - the anthropological fieldwork - on which it is based. I did my fieldwork at one particular lab, COGS, and at a number of conferences on Artificial Life and Artificial Intelligence. What I want to depict is the way that Artificial Life was practised at this lab and at these conferences.

On the other hand, I am interested in the particularities of Artificial Life as a discipline, not only as a means of generating general sociological theory (as Latour, Woolgar, and Knorr-Cetina do in their laboratory studies). The specific content of the discipline of Artificial Life is therefore part of the subject matter of this thesis.

Hence, the present work is situated between the two main categories I outlined above. It is a laboratory study, but, rather than describing scientific practice in general, it attempts to describe how the technoscience called Artificial Life is constructed. It is also a study of an academic discipline, ALife, but it aims at explaining this direction of thought by locating it in the social and technical context of laboratory life rather than in the cultural context of the larger society.

In relating the content of ALife research to the practices in laboratories and conferences of ALife, I am not ready to follow Traweek and leave physics to the physicists (or ALife to the ALifers). In this thesis I relate the specific content of ALife at COGS to the specific practice of ALife at COGS.

It is in the meeting point between the scientific content of ALife, the particular "tradition of thought" (Varela and Bourgine 1992:xi), and the practice of ALife, that the working machines with which I introduced this chapter play a pivotal role. We will see that they occupy a central position throughout this thesis. Artificial Life researchers both made machines and studied them. I now turn to an initial description of these machines.

Artificial Life researchers mostly study things that go on inside computers. It can therefore be useful to compare Artificial Life with the endeavour that is known as Computer Science. In the Oxford Dictionary the latter is defined as "the branch of knowledge that deals with the construction, operation, programming, and applications of computers." Computer scientists make computers, and they study them in order to make them better. Sometimes Artificial Life research fits such a definition. This is the case when researchers, inspired by biological ideas, attempt to make a program that solves a specific problem better than other programs do. For example, inspired by the Darwinian notion of evolution by natural selection, ALifers made so-called Genetic Algorithms. They then used such an algorithm to evolve a controller system - or "brain" (here the quotation marks are theirs) - for a robot.

More often, however, ALifers did more than engineering useful artefacts. They made biologically inspired programs that produced large sets of phenomena, and then they studied these worlds of phenomena as surrogates, alternative "natures" or, in fact, as "artificial lives". These artificial lives were studied in order to be able to say something general about life or cognition. That is, they made what they called a simulation.

If you are a human being, to simulate means to pretend, to communicate signs which refer to something other than what you are literally. A person may simulate sickness while really feeling okay. The person signifies sickness. To treat a computer program as a simulation means to treat it as a sign or a system of signs that refers to something that it is not. When a computer program is understood as a simulation it has a referent outside itself. So, when ALifers made simulations they tried to understand something more than the program that they made. They could, for example, use what they learned from running genetic algorithms to say something about biological evolution.

In order to talk about simulations I need to be able to talk about the referent of simulations. I first thought about using ALife researcher Chris Langton's term life-as-we-know-it (Langton 1989b:1). In his definition of Artificial Life, Langton defines life-as-we-know-it by contrast to life-as-it-could-be:

By extending the empirical foundation upon which biology is based beyond the carbon-chain life that has evolved on Earth, Artificial Life can contribute to theoretical biology by locating life-as-we-know-it within the larger picture of life-as-it-could-be. (Langton 1989b:1)

There is, however, a problem with the "we" in this phrase. When ALifers formally (in scientific papers, etc.) relate their simulation to life-as-we-know-it, this life is often life as some other scientific discipline knows it. That discipline is usually biology, but it could also be cognitive psychology or a social science (like economics). Hence, the "we" in life-as-we-know-it would be biologists, psychologists, etc. The referent would be life-as-biologists (etc.)-know-it. In more informal settings life-as-we-know-it could be the life of the researcher. A researcher could use metaphors from his daily life in order to explain some simulated phenomena. Here the referent is "life-as-I-know-it". When ALifers made robots, they often first made a computer simulation of a robot in an environment. They developed a virtual robot in virtual space before they made a real one. In these cases life-as-we-know-it - the referent of the simulation - is another machine.

Not quite satisfied with life-as-we-know-it (as I wanted to use it), I came across a term in Sherry Turkle's last book about young Internet users (Turkle 1996). One of her informants simply spoke about the life he lived outside the many Internet communities to which he belonged as "RL" - real life. I have adopted his RL, although I will spell it out as "Real Life". Real Life is not meant to refer to some kind of objective reality. It is always the Real Life of someone, and it is relative to Artificial Life (here not understood as the discipline, but as the "life" in the computer simulations). I will return to the Real Life references of Artificial Life in later chapters; here I will turn to another aspect of simulations, namely their role in experiments.

One element that profoundly shapes Artificial Life research is the increasing speed and complexity of computers. They can run more and more complex programs. ALife researchers utilise this speed and power by making simulations that produce unpredictable phenomena. A program that mimics Darwinian evolution, a Genetic Algorithm, for example, may simulate one or more populations consisting of, say, 100 individuals (often talked about as animats, from "animal" and "automata"). These populations may reproduce themselves through, say, 1000 generations. The animats may interact with each other and with a simulated environment. To understand what is going on in such a process is not easy, even for the one who has designed the simulation; it has to be studied. The virtual domain of phenomena that a simulation creates and that the ALifers study is often talked about as a world. There are a couple of features of these worlds that made them into appropriate objects of a technoscientific study. First, they either produced results or they did not. There is always room for doubt as to what may count as results, but there is also something "objective" about them; we cannot - in Berger and Luckmann's sense of "reality" - "wish them away" (Berger and Luckmann, 1966:13). Related to this objectivity is the fact that these worlds exist behind computer screens. That is, they tend to exist at a distance from the observer. They are "out there", behind a piece of glass, behind a computer screen.
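To make the scale of such a simulation concrete, the following is a minimal sketch of a Genetic Algorithm with 100 individuals. It is not taken from any program used by the ALifers described here. The bit-string genomes, the fitness measure (the number of 1-bits), the tournament selection, the mutation rate, and the function name `evolve` are all illustrative assumptions.

```python
import random


def evolve(pop_size=100, generations=1000, genome_len=20,
           mutation_rate=0.01, seed=0):
    """Toy genetic algorithm (hypothetical example).

    Genomes are lists of bits; fitness is the count of 1-bits.
    Returns the final population and the best fitness per generation.
    """
    rng = random.Random(seed)  # seeded so that runs are repeatable
    fitness = sum              # assumed fitness: number of 1-bits

    # Start from a random population.
    population = [[rng.randint(0, 1) for _ in range(genome_len)]
                  for _ in range(pop_size)]
    history = []

    for _ in range(generations):
        history.append(max(fitness(g) for g in population))
        next_generation = []
        for _ in range(pop_size):
            # Selection: the fitter of two random individuals becomes a parent.
            mother = max(rng.sample(population, 2), key=fitness)
            father = max(rng.sample(population, 2), key=fitness)
            # Reproduction: one-point crossover of the two parent genomes.
            cut = rng.randrange(1, genome_len)
            child = mother[:cut] + father[cut:]
            # Variation: each bit flips with a small probability.
            child = [1 - bit if rng.random() < mutation_rate else bit
                     for bit in child]
            next_generation.append(child)
        population = next_generation

    history.append(max(fitness(g) for g in population))
    return population, history
```

Calling `evolve()` runs the 100 individuals through 1000 generations. Even in a toy of this size the course of a run is not obvious from reading the code; one has to run it and inspect `history` to see what happened.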

The possibility of producing this objectivity, of creating distance between the observer and the observed, is not only an aspect of ALife research, it is one of the central elements in all technoscience. Therefore, before I look at how the distance between the ALifers and the virtual worlds they study is established, and to what degree this distance and objectivity are established in ALife research, I will turn to a general discussion of the distance and objectivity of technoscience. This discussion will of course be "situated", it will present a particular perspective on the matter. The perspective I will present is, of course, first and foremost my own, but it is, as we will see, inspired by Michel Callon and Bruno Latour.

The machine and the trustworthy witness

In technoscience the scientist is commonly understood to be a person endowed with theories, feelings, interests, and intuitions; in short, with human subjectivity. As such, he is a member of the scientific community, or, we might say, a member of the Society of Subjects. The objects of study are understood to exist independently of this subjectivity. They are parts of what I call the Nature of Objects. The objectivity of technoscience, then, is based on a separation of the Subject and the Object and of the Society and Nature to which these belong. To understand "objectivity", we also have to understand "subjectivity". Moreover, this separation is based on the construction of particular machines, the experimental apparatus. These machines presumably provide the necessary distance between the one who studies and the thing studied.

Many Western philosophers have argued that there exists an objective (that is, subject-independent) reality, and that human beings through science can achieve an understanding of this reality. These philosophies can be classified under the "-ism" known as "objectivism". In addition to these philosophical speculations people might try to establish an objective practice. People can, in practice, try to establish a separation of subjects and objects. In the following I will not discuss the objectivism of various philosophers; I will discuss how technoscientific objectivity (to the degree that it exists) has become an established practice of laboratory life.

I will do this by telling a story, a sort of "origin myth" of technoscience, a story that - even if it is just one of many building blocks - metonymically may stand for the larger whole of which it is a part. The story is based on the books of Shapin and Schaffer (1985) and Latour (1993), and it is about the experimental philosopher Robert Boyle, his air pump, and his opponent Thomas Hobbes.

In the 17th century there was a natural-philosophical debate between the vacuists and the plenists. The former argued, philosophically and principally, that there could be space without matter - vacuum. The latter denied this. Space is a property of bodies. Without bodies of some sort there cannot be a space (and seemingly empty space is filled with so-called ether).

Robert Boyle (1627-1691) made a major contribution to this debate. He designed a highly sophisticated mechanism: an air pump and a glass globe. The manually operated air pump could suck the air out of the glass globe, and objects inside the glass globe could be manipulated without opening it. He then experimentally produced a vacuum. But he also refrained from taking sides in the debate between plenists and vacuists. Shapin and Schaffer write: "By 'vacuum' Boyle declared, 'I understand not a space, wherein there is no body at all, but such that is either altogether, or almost totally devoid of air'." (Shapin and Schaffer 1985:46) We might say that Boyle recontextualised the problem: Instead of arguing principally for or against "metaphysical" vacuum, he argued empirically by referring to the experiment. He defined an experimental context for the notion of vacuum, parallel to the philosophical debate. Furthermore, rather than appealing to the authority of logical, stringent thought, he invited trustworthy members of his community to witness the production of a vacuum under highly controlled circumstances. Hence, in this process Boyle did more than argue for the existence of experimental vacuum, he also argued for a new authority, made up of the machine and the trustworthy witness. The machine could reproduce the same results time after time, and the machine itself could be reproduced (within a few years there were six "high-tech" air pumps in Europe). The results of these machines were obviously "there", they could not be "wished away" (cf. Berger and Luckmann 1966:13). Boyle argued for the authority of the opinion of the witness by referring to an English legal act, Clarendon's 1661 Treason Act, where it was stated that, in a trial, two witnesses were necessary to convict a suspect (Shapin and Schaffer 1985:327). The opinions of these witnesses could be trusted because they were English Gentlemen, they were trustworthy, and they witnessed a phenomenon that they did not create.

In this double process, making an experiment and invoking a number of witnesses, Boyle (and his followers) performed more than "pure science". In arguing for a new source of legitimation - the trustworthy witness - he performed a political act. This can be seen most vividly in Boyle's interaction with one of his philosopher colleagues, Thomas Hobbes. In civil war-ridden England, Hobbes wanted to create one authority, one Power, under which all people would be united. Protestants and Catholics had been fighting each other for quite a while, so this authority should not be religious, but a secular Republic ruled by the Sovereign, a Leviathan. In a social contract the subjects of the Sovereign would give Him the absolute authority to represent them. In presenting his plan Hobbes argued against Protestants' "free interpretation" of the Bible, using what he saw as a mathematical demonstration. This demonstration was inspired by Euclidean geometry, where one makes logical deductions from a set of basic, self-evident premises. These logical demonstrations could not be refuted (if one accepted the premises) and were not dependent on ambiguous sensory experiences. Everything - Man, Nature, God, and the Catholic Church - could be united in one, mathematical and geometrical universe. Hobbes thus argued mathematically for his Leviathan.

However, Hobbes was more than one of the first political scientists; he also argued mathematically (that is, principally and logically) against the possibility of the existence of vacuum. But when Boyle made his air pump, Hobbes did not set up a counter experiment (as physicists might have done today); he denied the very legitimacy of the whole experiment, including the use of witnesses. Hobbes not only argued against the existence of vacuum, he argued against a new authority - the experiment and the opinion of the observers - which would threaten the absolute authority of the Sovereign. The opinions of Boyle's witnesses claimed authority not because they followed logically from a set of first principles, but because they were testimonies of Nature (just as the opinions of the Protestants claimed authority as testimonies of the will of God). So when Boyle and his followers founded the first community for the promotion of "experimental natural philosophy", the Royal Society of London, Hobbes wrote to the king to warn against its activities. Gradually Hobbes got his secular state. Boyle & Co, however, also got their Royal Society, and the first modern scientific authority, based on the machine and the trustworthy witness, was established. This institution discusses matters of Nature independent of the State, the Society or the Subject. Thus, two major, separated domains of authority were constituted; one political, that was in charge of the laws governing the subjects, and one scientific, that administered the laws regulating the objects, the natural laws. The politician became the legitimate representative of Society, the scientist became the authorised representative of Nature.

There is a strange duality in what Boyle did. On the one hand he created a situation - the experiment - where facts could be fabricated by humans in a highly controlled way. On the other hand he invoked the notion of the trustworthy witness, an English gentleman in Boyle's time, who, like the witness of a crime, was observing a phenomenon that he had no responsibility for creating, but was merely describing.

Hence, on the one hand we may ask: Can the vacuum that Boyle produced be reduced to a Nature existing independently of human beings? The answer is clearly no. The whole experiment is a highly specialised and localised social and technical arrangement. It required highly advanced technical equipment that could only be made by the most skilful craftsmen of the time, and it was dependent on an institution of authority, the Royal Society, with its trustworthy witnesses. On the other hand we may ask: Can the vacuum be reduced to a Social Construction? Again the answer is no. It was precisely Boyle's point that whatever metaphysical arguments the plenists (or the vacuists) made about ether or the principal possibility of having space without body, the glass globe sucked empty of air is there to be observed. About this Bruno Latour writes:

Ironically, the key question of the constructivists - are facts thoroughly constructed in the laboratory? - is precisely the question that Boyle raised and resolved. Yes, the facts are indeed constructed in the new installation of the laboratory and through the artificial intermediary of the air pump. The level does descend in the Torricelli tube that has been inserted into the transparent enclosure of a pump operated by breathless technicians. 'Les faits sont faits': "Facts are fabricated," as Gaston Bachelard would say. But are facts that have been constructed by man artefactual for that reason? No: for Boyle, just like Hobbes, extends God's 'constructivism' to man. God knows things because He creates them. We know the nature of the facts because we have developed them in circumstances that are completely under our control. Our weakness becomes a strength, provided that we limit our knowledge to the instrumentalized nature of the facts and leave aside the interpretation of causes. Once again, Boyle turns a flaw - we produce only matters of fact that are created in laboratories and have only local value - into a decisive advantage: these facts will never be modified, whatever may happen elsewhere in theory, metaphysics, religion, politics or logic. (Latour 1993:18, references deleted)

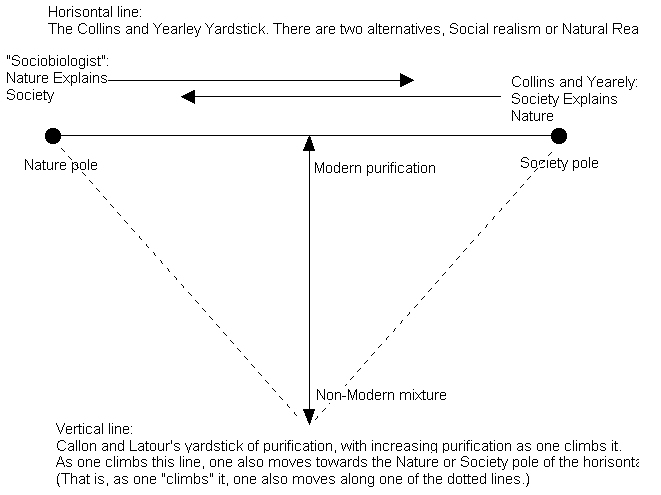

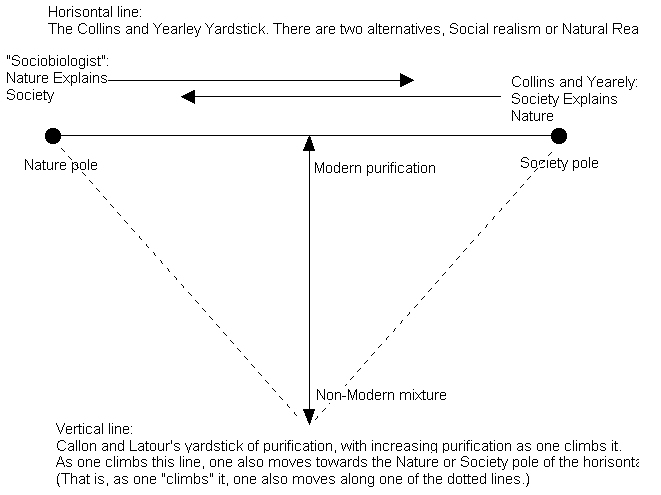

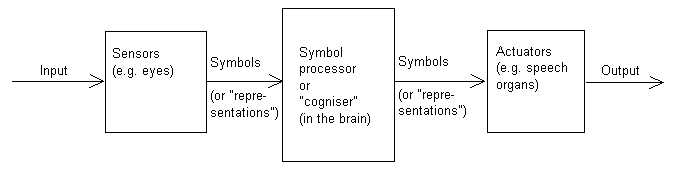

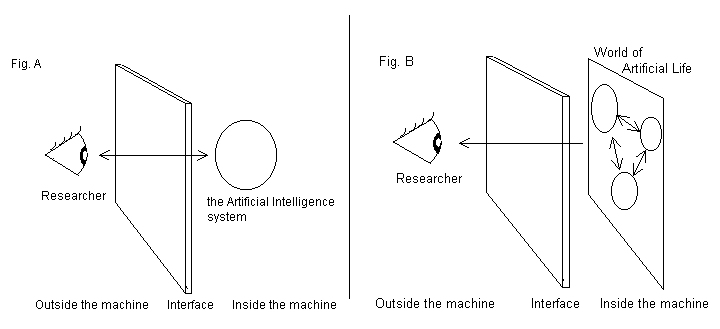

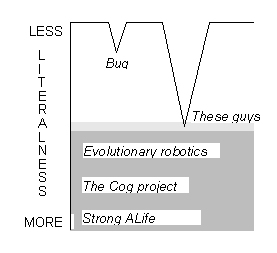

Thus the authority of technoscience is - according to Shapin, Schaffer, and Latour - not solely based on the logic of geometrical, Euclidean thought, but on a description by a trustworthy witness of an event that - even if it only occurs in specially designed circumstances - the witness has not himself created. The bird that demonstrates "a space [...] devoid of air" by suffocating in the glass globe, or the feather that demonstrates the lack of Hobbes' "ether wind" by falling right down, cannot be reduced to "ideas" or "social relations". What is constructed - by humans - in the experiment is, paradoxically, a sort of independence from human factors. It is this independence, or objectivity, which makes it possible to see the observer as a distanced witness and not an accomplice in the event.